Exploring inspiring possibilities drives innovation

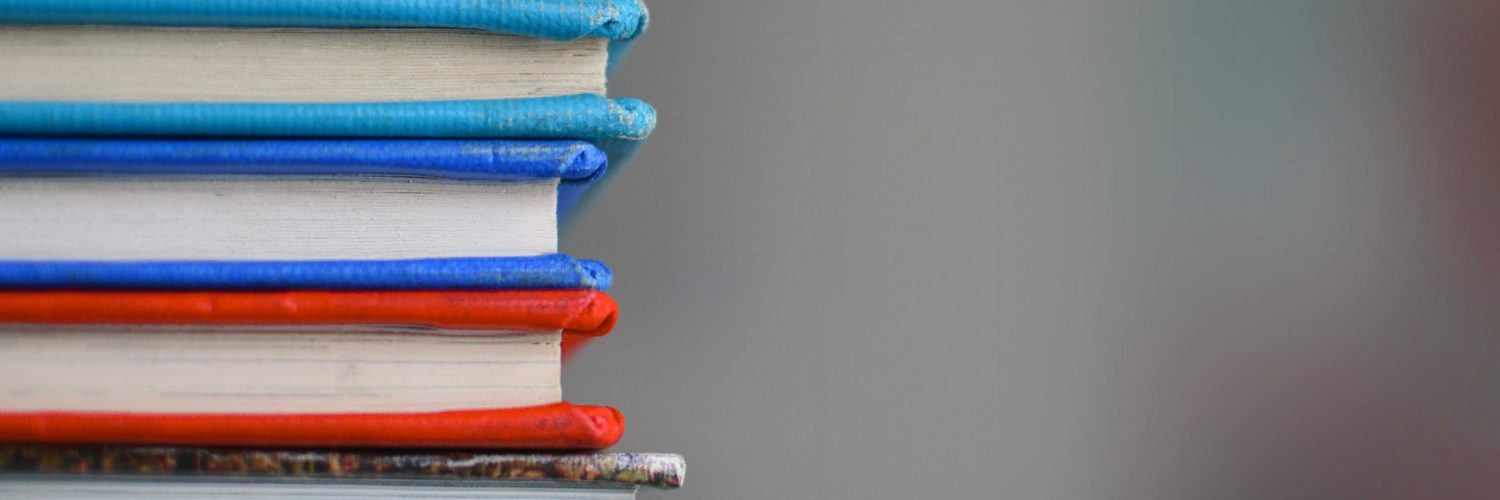

Solitans have a long history of attending events and conferences, and that has never been questioned. Meeting new people, joining discussions, or simply exploring a new environment are the seeds that quite often lead us to novel ideas. During the past couple of years the number of on-site events was negligible. Now, after the main Covid era, many events and summits with real-life interaction have returned, and people are getting together like before. One of those huge events is the annual AWS re:Invent in Las Vegas, NV.

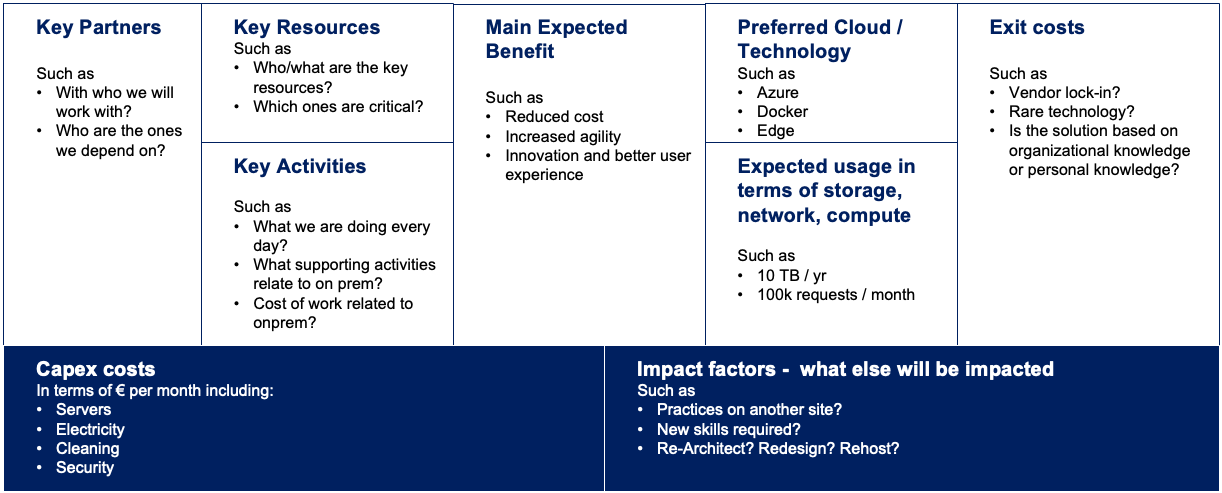

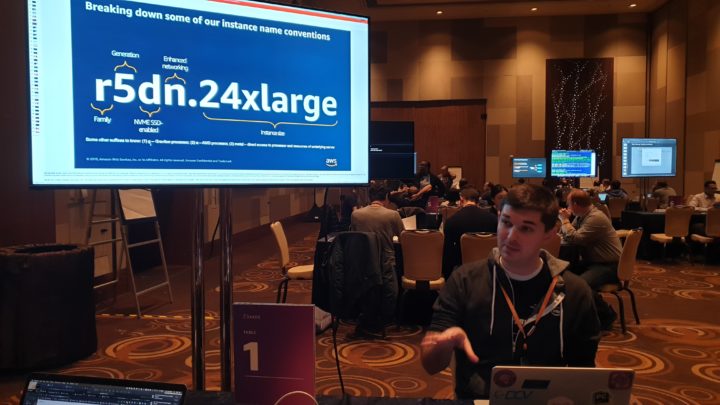

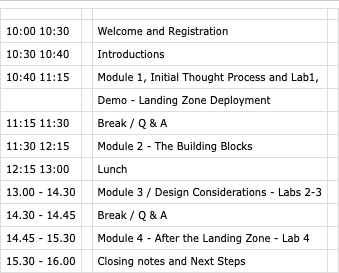

Among many other developers and cloud enthusiasts, we participated in AWS re:Invent last November. The event was full of sessions, workshops, and chalk talks covering all aspects of cloud. If you are interested in the content of re:Invent, please check Heikki’s blogs (here, here, here and here). Solitans are rather autonomous individuals, which explains why we mostly attended different sessions without structured planning, each of us choosing based on our own interests. For example, Tero was curious about migrations and the business value enabled by cloud, while Joonas focused on governance and networking.

Key takeaways by Tero and Joonas

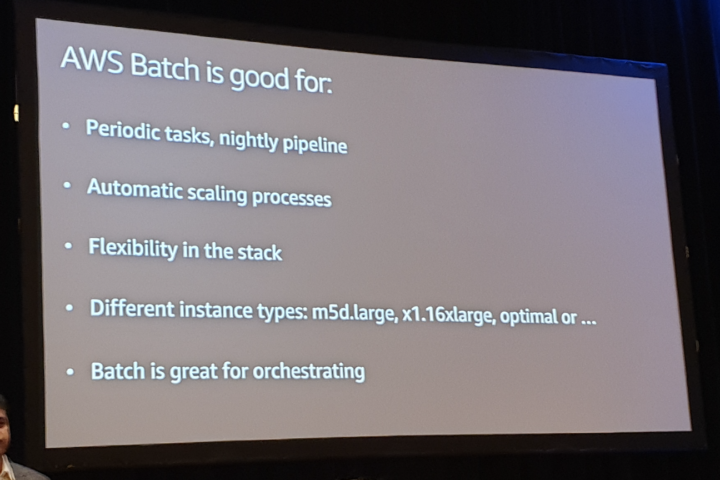

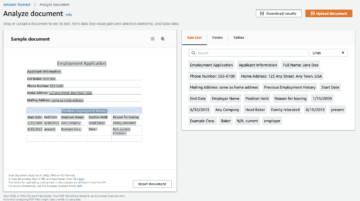

Tero’s interest in migration was satisfied by a handful of workshops. They covered AWS’s migration services broadly, such as AWS Application Migration Service and AWS Migration Hub, and strengthened his understanding of those services as a whole. In particular, the services seem to include lots of interesting features that make migration smooth and nice. At least within the workshops’ sandboxes ☺. In addition to the migration-related themes, Tero attended sessions on the Leadership track. There he heard inspiring use cases where various kinds of benefits have been realized through cloud transformation.

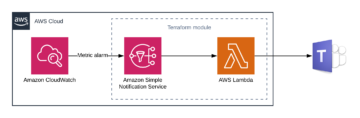

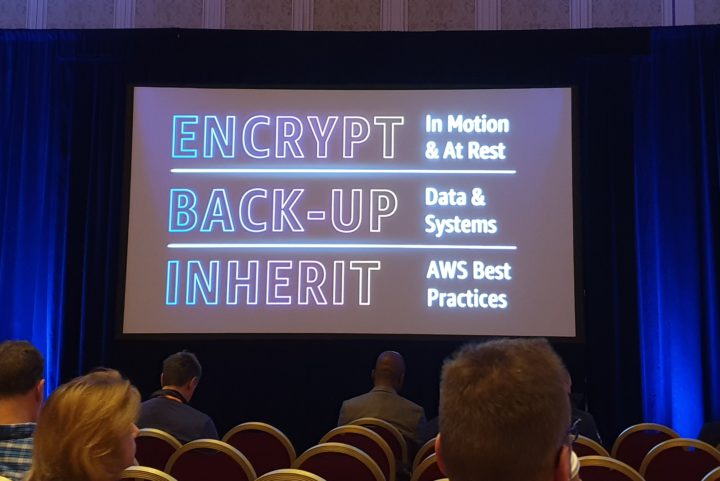

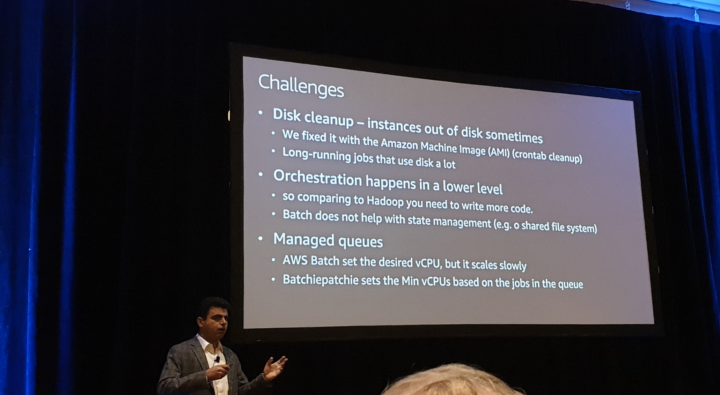

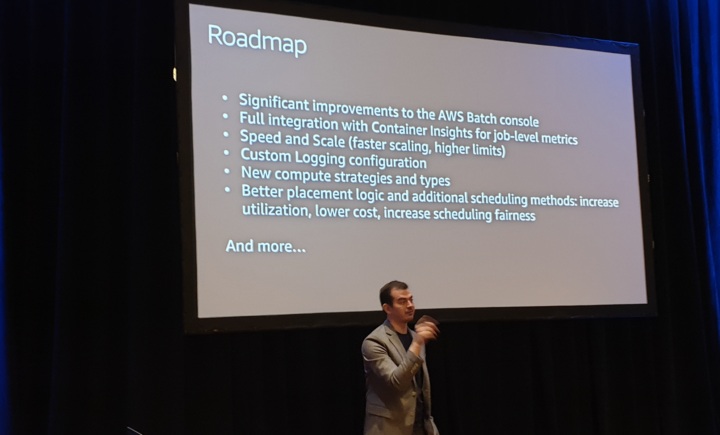

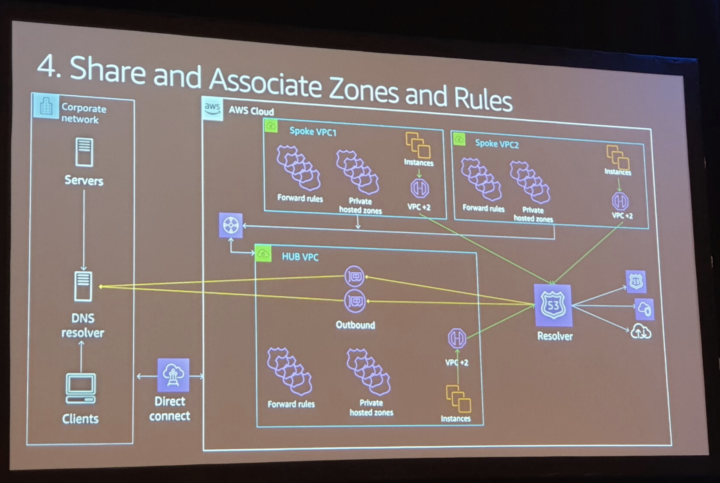

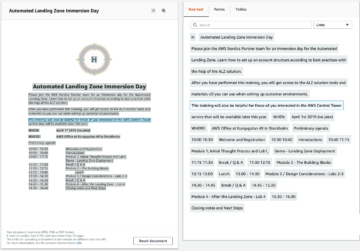

Joonas participated mainly in chalk talks and workshops that provided deeper insights into existing services such as AWS Backup and AWS Control Tower. At re:Invent, AWS usually launches new services and new features for existing ones, and this time was no exception. The event provided a first glimpse of some new releases, of which perhaps the most interesting was VPC Lattice. The sessions also introduced useful features, some of which might have been released earlier. At the governance level, some newer AWS Control Tower features have potential. The amount of information gathered in a week was quite overwhelming.

Looking forward to 2023

The variety of interests among Solitans and the possibilities to explore novel ideas are not going to decrease. The current year, 2023, is full of interesting events. Naturally, AWS re:Invent will be among the conferences where you can find Solitans. Beyond re:Invent, Solitans can most likely be spotted at almost every major event that addresses cloud, novel technologies and value creation. We are a rather big group with a variety of interests, so come and say hey to us. We are pretty nice people to hang around with ☺. Solita is a growing company, and the growth is based on a combination of people with various backgrounds and passions. We expect that our curious culture will draw positive attention and that more people will join us during the year.

At the moment we have over 1600 colleagues spread over six countries. We Solitans are different, but at the same time we share at least one common trait: curiosity. Learning new things and evolving by attending events, summits, and conferences is very typical for us. We see this participation as something that drives us to find the best and most innovative solutions for complex situations. We are curious to find solutions that have an impact that lasts.

Check out our open positions.