Is cloud always the answer?

Every application reaches a point in its lifecycle where the question arises: should it be modernised or only updated slightly? The question is straightforward. The answer might not be, as there are both business and technological aspects to consider. Arriving at a rational answer is not an easy task. A cloud transformation should always answer a business need, and it should also be technologically feasible. Often it is tempting to make the decision hastily and simply move forward, because gathering a holistic view of the application is difficult. But neglecting rational analysis just because it is difficult is not always the right path to follow. Success on the cloud journey requires guidance from business needs as well as technical knowledge.

To address this, companies can formalise a cloud strategy. Some companies find this an excellent way forward, because the strategy work builds a holistic understanding and identifies guidance for the next steps. A cloud strategy also states the main reason why the cloud transition supports value generation and how it connects to the organisation’s strategy. However, cloud strategy work is sometimes considered too large and premature an activity. This is particularly the case when the cloud journey has not really started: the knowledge gap may seem too vast to overcome, and it is challenging to think about structured utilisation of the cloud. Organisations might struggle to manoeuvre through the mist to find the right path on their cloud journey. There are expectations and there are risks. There are low-hanging fruits, but there might be something scary ahead that does not even have a name yet.

A canvas to help the cloud journey

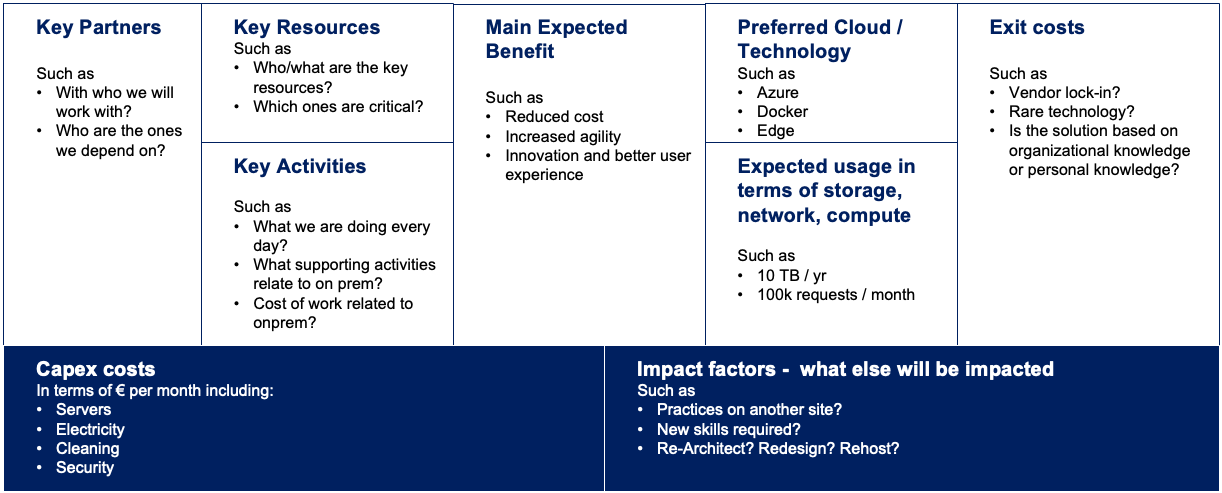

Benefits and risks should be considered analytically before transferring an application to the cloud. Inspired by the Business Model Canvas, we came up with a canvas that addresses various aspects of the cloud transformation discussion. The Application Evaluation Canvas (AEC), presented in Figure 1, guides the evaluation to cover a wide range of aspects, from the current situation to the expectations of the cloud.

Figure 1. Application Evaluation Canvas

The main expected benefit is the starting point for any further considerations. There should be a clear business need and a concrete target that justify the cloud journey for that application. That target also makes it possible to define the specific risks that might hinder reaching the benefits. Migration to the cloud and modernisation should always have a positive impact on the value proposition.

The left-hand side of the canvas

The left-hand side of the Application Evaluation Canvas addresses the current state of the application. The current state is evaluated from four perspectives: key partners, key resources, key activities, and costs. The Key Partners section advises seeking answers to questions such as who is currently working with the application. The migration and modernisation activities will inevitably affect those stakeholders. In addition to the key partners, some resources might be crucial for the current application, for example in-house competences that relate to rare technical expertise. These crucial resources should be identified. Furthermore, it is not only competences that are crucial: many activities are carried out every day to keep the application up and running. Understanding them makes the evaluation more meaningful and precise. Once key partners, resources, and activities have been identified, a good understanding of the current state is established, but that is not enough. The cost structure must also be well known. Without knowledge of the costs of the application’s current state, the whole evaluation is not on solid ground. Costs should be identified holistically, ideally covering not only direct costs but also indirect ones.

…and the right-hand side

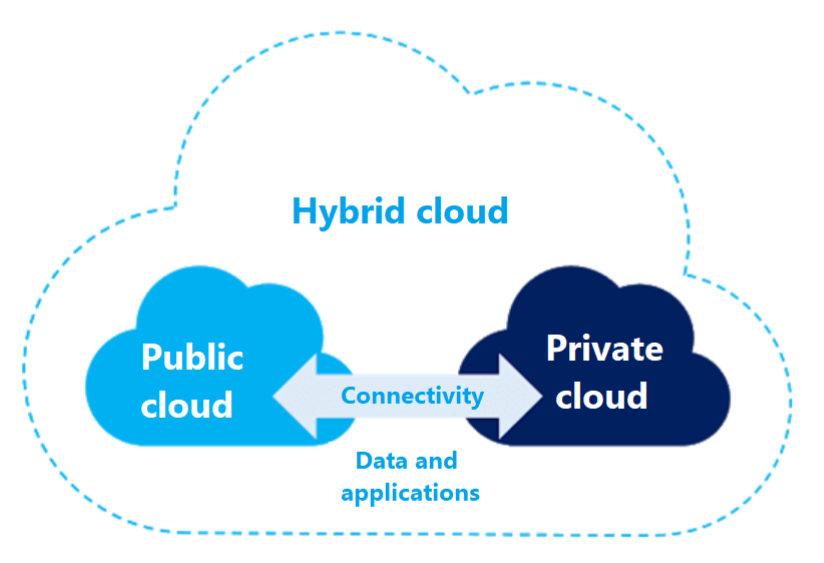

On the right-hand side, the focus is on the cloud and the expected outcome. The main questions to consider relate to the selection of the hyperscaler, the expected usage, awareness of the holistic change that cloud transformation brings, and naturally the exit plan.

The selection of the hyperscaler might be trivial when the organisation’s cloud governance guides the decision towards a pre-selected cloud provider. However, a lack of central guidance, autonomous teams, or application-specific requirements might bring the hyperscaler selection to the table. In any case, a clear decision should be made when evaluating paths towards the main benefit.

The cloud transformation will affect the cost structure by shifting it from CAPEX to OPEX. Therefore, a realistic forecast of the usage is highly important. Even though the costs will follow the usage, the overall cost level will not necessarily decrease dramatically right away, at least not at the beginning of the migration. There will be a period in which the current cost structure and the cloud cost structure overlap, as CAPEX costs won’t decrease immediately while OPEX-based costs start occurring. Furthermore, the elasticity of OPEX might not be as smooth as predicted because of contractual issues; for example, an annual pricing plan for SaaS might be difficult to change during the contract period.
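The overlap of the two cost structures can be illustrated with a small calculation. The following is only a rough sketch under simplifying assumptions that are not part of the canvas itself: cloud usage ramps up linearly, the current costs stay flat until the old environment is decommissioned, and all names and figures are hypothetical.

```python
def projected_monthly_costs(current_monthly, cloud_monthly, ramp_months,
                            decommission_month, horizon_months):
    """Return the total cost for each month during and after a migration.

    Assumptions (illustrative only): cloud cost grows linearly until
    `ramp_months`, and the current cost stays constant until the old
    environment is switched off after `decommission_month`.
    """
    totals = []
    for month in range(1, horizon_months + 1):
        # OPEX side: cloud cost follows usage, capped at full usage.
        cloud_share = min(month / ramp_months, 1.0)
        cloud_cost = cloud_monthly * cloud_share
        # Current side: costs do not drop until decommissioning.
        current_cost = current_monthly if month <= decommission_month else 0.0
        totals.append(current_cost + cloud_cost)
    return totals


# Hypothetical figures: 10,000 per month today, 8,000 per month in the cloud,
# four months of ramp-up, old environment switched off after month six.
costs = projected_monthly_costs(10_000, 8_000, ramp_months=4,
                                decommission_month=6, horizon_months=8)
for month, total in enumerate(costs, start=1):
    print(f"month {month}: {total:,.0f}")
```

With these made-up figures the monthly total rises to 18,000 during the overlap and only falls to 8,000 once the old environment is gone, which is why the forecast should cover the whole transition period and not only the end state.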

The cost structure is not the only thing that changes after cloud adoption. The expected benefit depends on several impact factors. These might include success in organisational change management and finding the required new competences. The application might also require more than a lift-and-shift type of migration before the main expected benefit can be reached.

Don’t forget exit costs

The final section of the canvas addresses exit costs. Before any migration, the exit costs should be discussed to avoid surprises if the change has to be rolled back. The exit cost might relate to vendor lock-in. Vendor lock-in is itself a vague topic, but it is crucial to understand that there is always some vendor lock-in. One cannot get rid of vendor lock-in with a multicloud approach: instead of single-vendor lock-in there is multicloud-vendor lock-in. Additionally, the orchestration of microservices is vendor specific even if a microservice itself might be transferable. Utilising some kind of cloud-agnostic abstraction layer creates a lock-in to the provider of that abstraction layer. Cloud vendor lock-in is not the only kind of lock-in that has a cost. Utilising a rare technology will inevitably tie the solution to that third party, and changing the technology might be very expensive or even impossible. Furthermore, lock-in can also have an in-house flavour, especially when there is a competence that only a couple of employees master. So the main goal is not to avoid all lock-ins, as that is impossible, but to identify the lock-ins and decide which type of lock-in is feasible.
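For teams that want to track canvases for several applications, the sections discussed above could be captured as a simple record. This is only a sketch: the field names are our own shorthand for the sections described in the text, not official labels from the canvas, and the example values are hypothetical.

```python
from dataclasses import dataclass, field, fields
from typing import List


@dataclass
class ApplicationEvaluationCanvas:
    """Hypothetical record of one filled-in canvas (field names are assumptions)."""
    application: str
    # Starting point: the benefit that justifies the cloud journey, and its risks.
    main_expected_benefit: str = ""
    risks: List[str] = field(default_factory=list)
    # Left-hand side: current state of the application.
    key_partners: List[str] = field(default_factory=list)
    key_resources: List[str] = field(default_factory=list)
    key_activities: List[str] = field(default_factory=list)
    current_costs: List[str] = field(default_factory=list)
    # Right-hand side: cloud and expected outcome.
    hyperscaler: str = ""
    expected_usage: str = ""
    impact_factors: List[str] = field(default_factory=list)
    exit_costs: List[str] = field(default_factory=list)

    def open_sections(self) -> List[str]:
        """Return the names of sections that have not been filled in yet."""
        return [f.name for f in fields(self) if not getattr(self, f.name)]


# Hypothetical, partially filled canvas for an imaginary invoicing application.
canvas = ApplicationEvaluationCanvas(
    application="invoicing",
    main_expected_benefit="shorter release cycle",
    key_partners=["finance team"],
    hyperscaler="pre-selected provider",
)
print(canvas.open_sections())
```

Listing the open sections makes it visible which parts of the discussion, exit costs included, are still missing before a migration decision is made.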

Conclusion

As a whole, the Application Evaluation Canvas can help to gain a holistic understanding of the current state. Connecting expectations to a more concrete form will support the decision-making process on how the cloud adoption can be justified with business reasons.