The learning curve of a Cloud Service Specialist at Solita

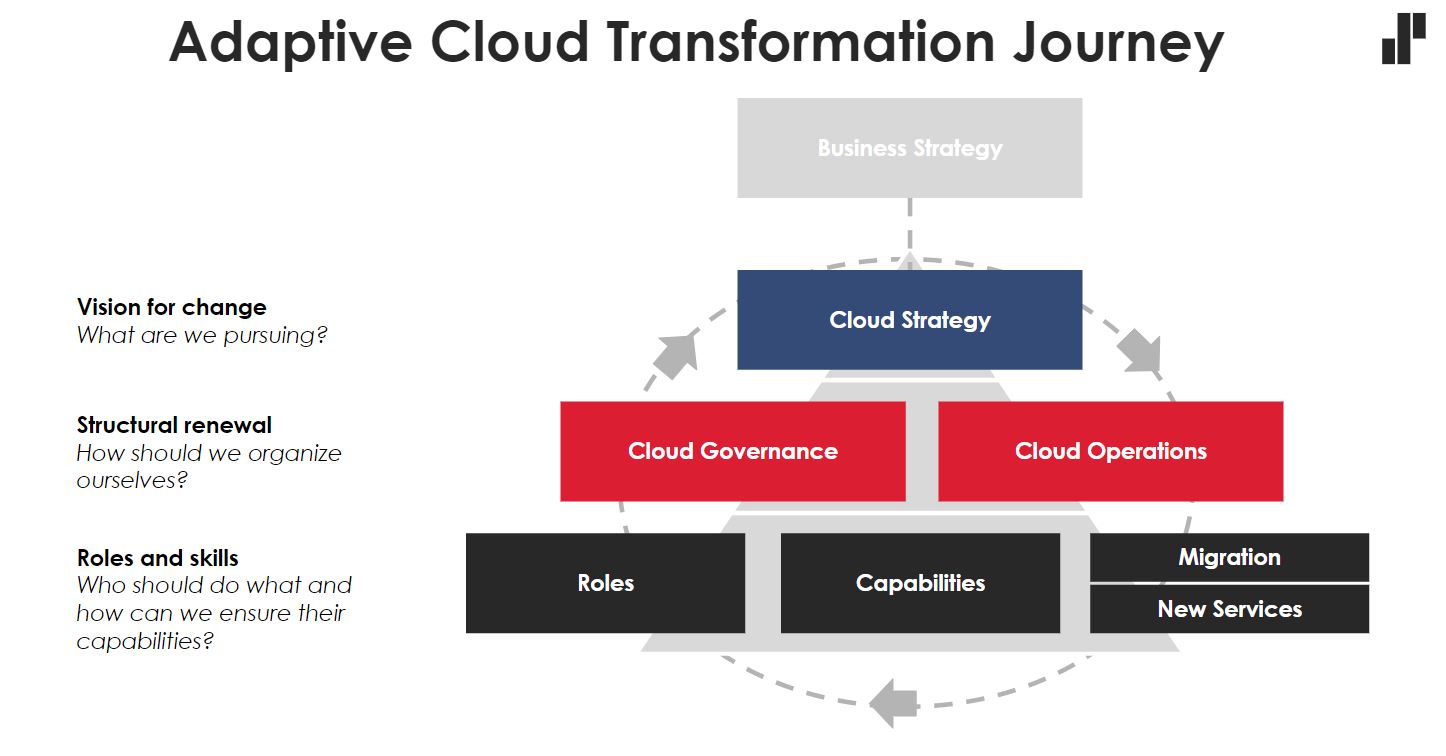

I’ve been working at Solita for six months as a Cloud Service Specialist. I’m part of the cloud continuous services team, where we take care of our ongoing customers and ensure that their cloud solutions are running smoothly. After our colleagues have delivered a project, we basically take over and continue supporting the customer with the next steps.

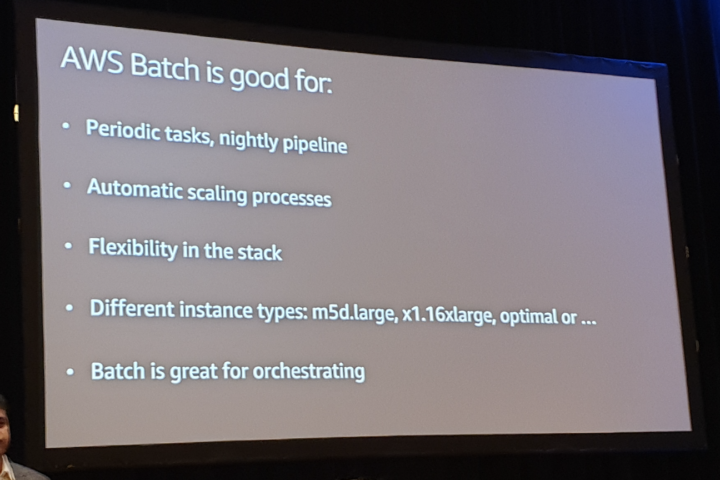

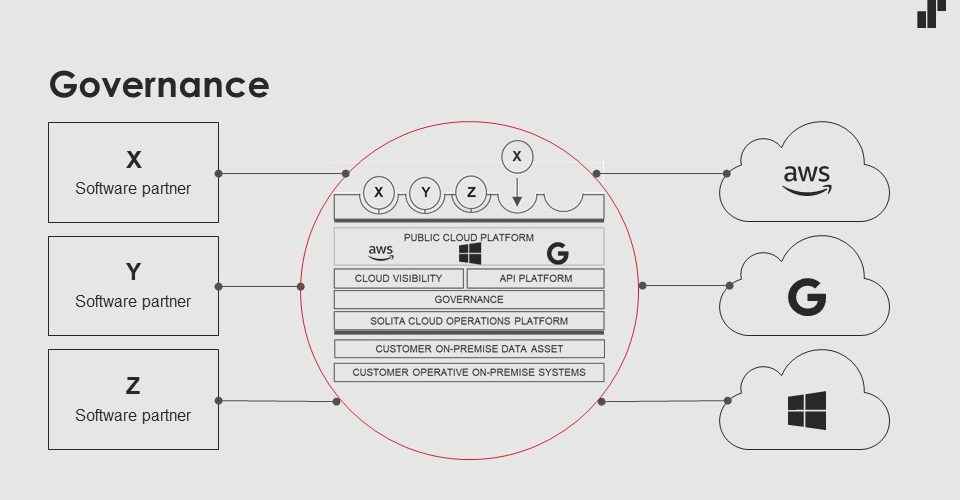

What I like about my job is that every day is different. I get to work with and learn from different technologies; we work with all the major cloud platforms, such as AWS, Microsoft Azure and Google Cloud Platform. Our days also vary because we serve and support different types of customers. The requests we get are varied, so there is no boring day in this line of work.

What inspires me the most in my role is that I’m able to work with new topics and develop my skills in areas I haven’t worked on before. I wanted to work with public cloud, and now I’m doing it. I like the way we exchange ideas and share knowledge in the team. This way, we can find ways to improve and work smarter.

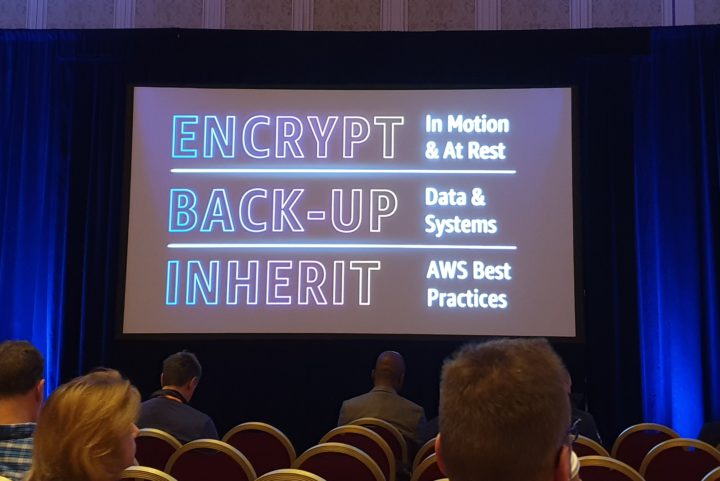

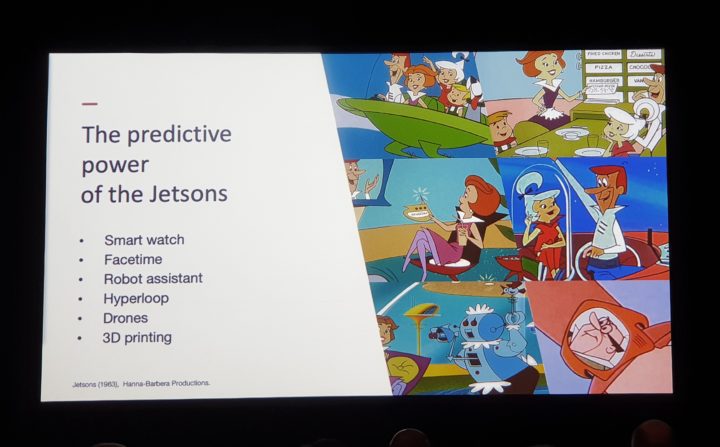

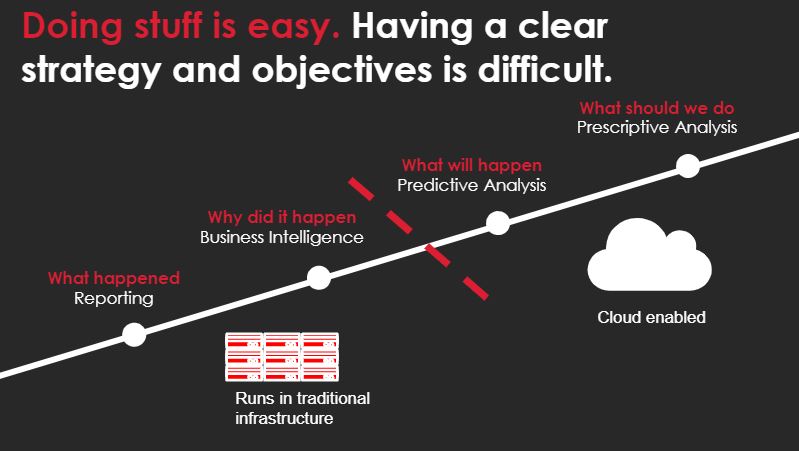

We have this mentality of challenging the status quo positively. Also, the fact that the industry is changing quickly brings a nice challenge; to be good at your job, you need to be aware of what is going on. Solita also has an attractive client portfolio and a track record of building very impactful solutions, so it’s exciting to be part of all that too.

I got responsibility from day one

Our team has grown a lot, which means that we have people with different perspectives and visions. It’s a nice mix of seniors and juniors, which creates a good environment for learning. I think the collaboration in the team works well, even though we are located in different offices around Finland. While we take care of our tasks independently, there is always support available from other members of the cloud team. Sometimes we go through things together to share knowledge and spread the expertise within the team.

The overall culture at Solita supports learning and growth. There is a really low barrier to asking questions, and you can ask for help from anyone, even people outside of your team. I joined Solita with very little cloud experience, but I’ve learned so much during the past six months. I’ve had responsibility from the beginning and learned by doing, which is the best way of learning for me.

From day one, I got the freedom to decide which direction I wanted to take in my learning path, including the technologies. We have study groups and flexible opportunities to get certified in the technologies we find interesting.

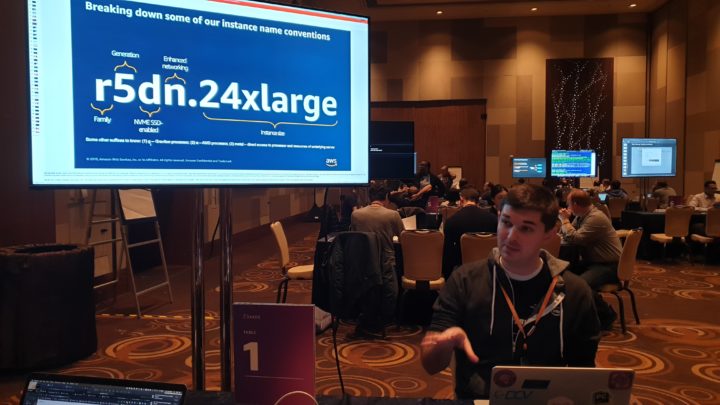

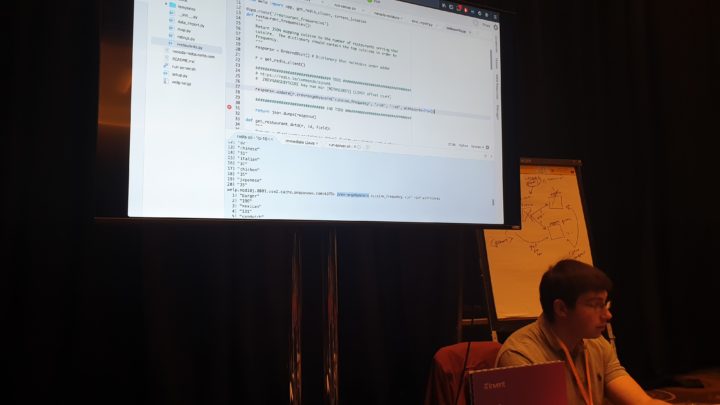

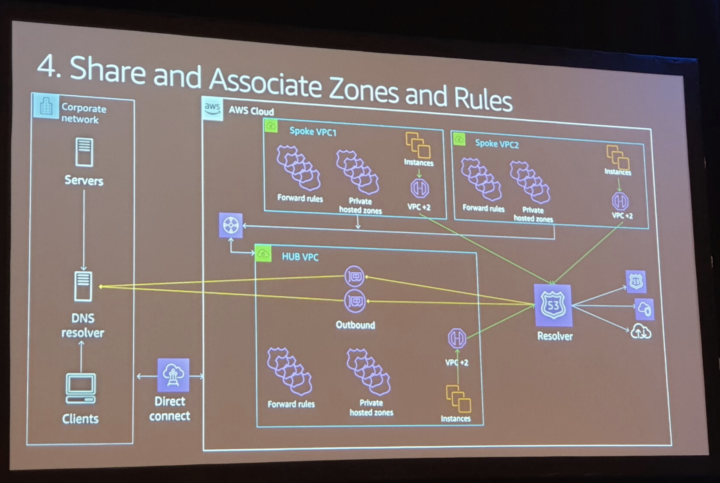

As part of the onboarding process, I completed a practical training project in a sandbox environment. We started from scratch, built the architecture, and drew up the process as we would in a real-life situation, navigating the environment and practising the technologies we needed. The process itself and the support we got from more senior colleagues were highly useful.

Being professional doesn’t mean being serious

The culture at Solita is very people-focused. I’ve felt welcome from the beginning, and even though I’m the only member of the cloud continuous services team here in Oulu, people have adopted me as part of the office crew. The atmosphere is casual, and people are allowed to have fun at work. Being professional doesn’t mean being serious.

People here want to improve and go the extra mile in delivering great results to our customers. This means that to be successful in this environment, you need to have the courage to ask questions and look for help if you don’t know something. The culture is inclusive, but you need to show up to be part of the community. There are many opportunities to get to know people, such as coffee breaks and social activities. We also share stories from our personal lives, which makes me feel that I can be my authentic self.

We are constantly looking for new colleagues in our Cloud and Connectivity Community! Check out our open positions here!