Avoid the pitfalls: what to keep in mind for a smooth start with cloud services

Why the public cloud?

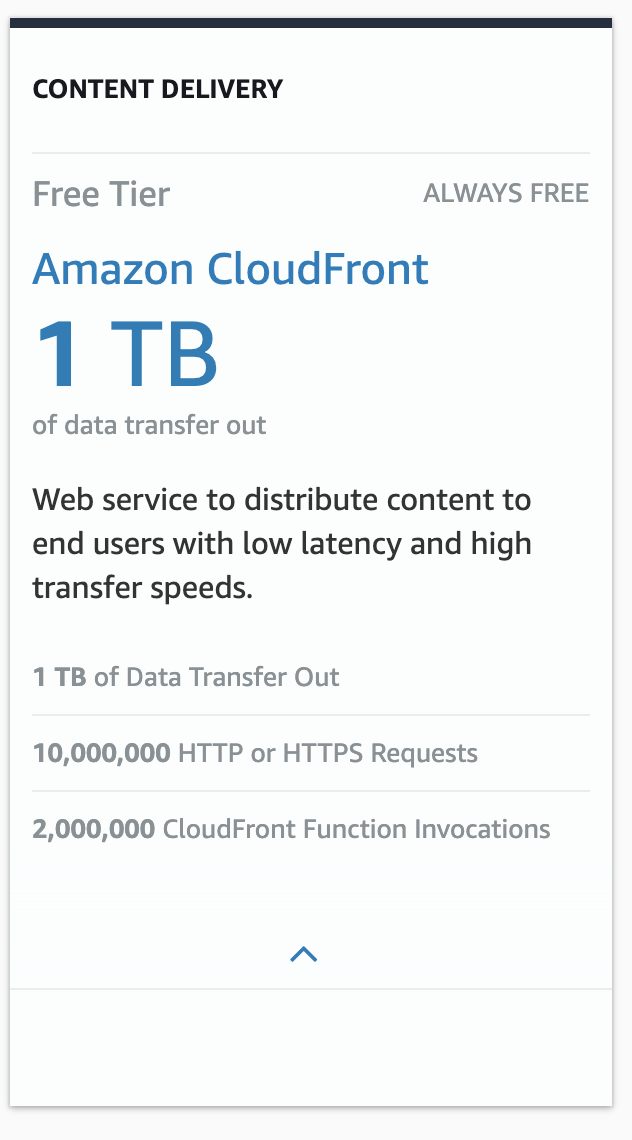

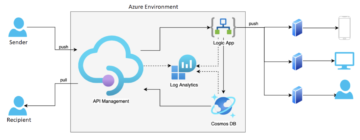

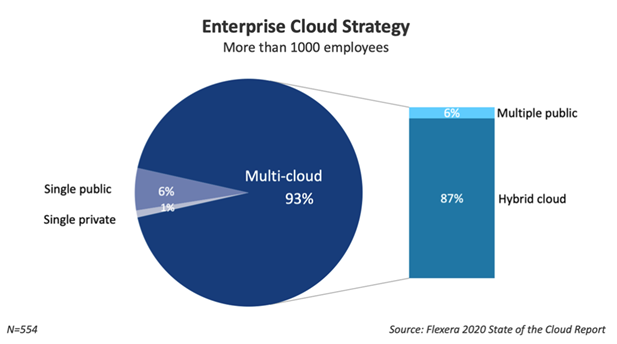

The public cloud lets you scale as needed, choose from a variety of convenient SaaS (Software as a Service), PaaS (Platform as a Service) and IaaS (Infrastructure as a Service) solutions, and pay only for what you actually use.

The public cloud gives a company the opportunity to make a great leap in development: the provider's ready-made services speed up development and help create new functionality.

All of this can be conveniently used without having to run your own data centre.

Goal setting

The first and most important step is to set a goal for the enterprise. The goal cannot be general; it must be specific and, if possible, measurable, so that at the end of the migration you can assess whether it has been achieved.

Goal setting must be an internal collaboration between the business side and the technical side of the company. If even one party is excluded, it is very difficult to reach a satisfactory outcome.

The goals can be, for example, the following:

- Cost savings. Do you find that running your own data centre is too expensive and operating costs are very high? Calculate how much the company currently spends on it, and set a goal for the percentage of savings you want to achieve. However, cost savings are not recommended as the main goal, because cloud providers also aim to make a profit. Rather, look for goals in the following areas that help you work more efficiently.

- Agility, i.e. faster development of new functionalities and the opportunity to enter new markets.

- Introduction of new technologies such as machine learning (ML), the Internet of Things (IoT) and artificial intelligence (AI). The cloud offers a number of ready-made services that are very easy to integrate.

- End of life for hardware or software. Many companies are considering migrating to the cloud at the moment when their hardware or software is about to reach its end of life.

- Security. Data security is a very important issue and is becoming increasingly important. Cloud providers invest heavily in security and treat it as a top priority, because an insecure service would compromise customer data and make customers reluctant to buy the service.

The main reason for migration failure is the lack of a clear goal (the goal is not measurable or not fully thought out).
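To illustrate what "measurable" can mean for the cost-savings goal, here is a minimal sketch in Python. The class name, field names and all figures are assumptions made up for this example; a real calculation would use the company's own cost data.

```python
from dataclasses import dataclass


@dataclass
class CostSavingsGoal:
    """A measurable goal: cut yearly running costs by a target percentage."""
    baseline_yearly_cost: float  # current yearly cost of the own data centre (assumed figure)
    target_savings_pct: float    # savings the company wants to achieve, in percent

    def achieved_savings_pct(self, new_yearly_cost: float) -> float:
        """Savings actually achieved, as a percentage of the baseline."""
        saved = self.baseline_yearly_cost - new_yearly_cost
        return 100 * saved / self.baseline_yearly_cost

    def is_met(self, new_yearly_cost: float) -> bool:
        """True if the achieved savings reach the target."""
        return self.achieved_savings_pct(new_yearly_cost) >= self.target_savings_pct


# Illustrative numbers only, not taken from any real migration.
goal = CostSavingsGoal(baseline_yearly_cost=200_000, target_savings_pct=15)
print(goal.achieved_savings_pct(160_000))  # 20.0
print(goal.is_met(160_000))                # True
print(goal.is_met(190_000))                # False
```

Writing the goal down in this form makes it possible to check, once the migration is finished, whether the target was reached.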

Mapping the architecture

The second step should be to map the services and application architecture in use. This mapping is essential to choose the right migration strategy.

In broad strokes, applications fall into two categories: applications that are easy to migrate and applications that require a more sophisticated solution. Take, for example, a large monolithic application whose high availability is ensured by a Unix cluster. An application with this type of architecture is difficult to migrate to the cloud, and the migration may not deliver the desired result.

The situation is similar with security. Although security is very important in general, it is especially important in situations where sensitive personal data of users, credit card data, etc. must be stored and processed. Cloud platforms offer great security solutions and tips on how to run your application securely in the cloud.

Security is critical to AWS, Azure, and GCP, and they invest far more in it than an individual customer ever could.

Secure data handling requires prior experience. Therefore, I recommend migrating applications with sensitive personal data at a later stage of the migration, once experience has been gained. It is also advisable to enlist the help of partners. Solita has previous experience in managing sensitive data in the cloud and is able to ensure the future security of data as well. Partners are able to give advice and draw attention to small details that may not be evident due to lack of previous experience.

This is why it is necessary to map the architecture and to understand what types of applications are used in the company. An accurate understanding of the application architecture will help you choose the right migration method.
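The mapping described above can be kept as a simple inventory. The sketch below is one possible way to do it in Python, assuming only two attributes per application; the application names and the ordering heuristic (non-sensitive and non-monolithic applications first) are hypothetical and chosen for illustration.

```python
from dataclasses import dataclass


@dataclass
class Application:
    name: str
    monolithic: bool              # e.g. a large monolith kept available by a Unix cluster
    handles_sensitive_data: bool  # personal data, credit card data, etc.


def migration_order(apps: list[Application]) -> list[Application]:
    """Order applications so that simple, non-sensitive ones come first.

    The sort key is a heuristic assumed for this sketch: applications with
    sensitive data move to the end, and within each group monoliths come last.
    """
    return sorted(apps, key=lambda a: (a.handles_sensitive_data, a.monolithic))


# Hypothetical inventory, for illustration only.
apps = [
    Application("billing", monolithic=True, handles_sensitive_data=True),
    Application("intranet-wiki", monolithic=False, handles_sensitive_data=False),
    Application("customer-portal", monolithic=False, handles_sensitive_data=True),
    Application("reporting", monolithic=True, handles_sensitive_data=False),
]

for app in migration_order(apps):
    print(app.name)
# intranet-wiki
# reporting
# customer-portal
# billing
```

An inventory like this keeps applications with sensitive data for the later stages, when the team has gained experience with the simpler ones.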

Migration strategies

‘Lift and Shift’ is the easiest way: an application is transferred from one environment to another without major changes to code and architecture.

Advantages of the ‘Lift and Shift’ way:

- In terms of labour, this type of migration is the cheapest and fastest.

- The resources used in the existing environment can be released quickly.

- You can quickly fulfil your business goal – to migrate to the cloud.

Disadvantages of the ‘Lift and Shift’ way:

- There is no opportunity to use the capabilities of the cloud, such as scalability.

- It is difficult to achieve financial gain on infrastructure.

- Adding new functionalities is a bit tricky.

- Almost 75% of these migrations are redone within two years: the company either returns to its own data centre or uses another migration method. At first glance, it seems like a simple and fast migration strategy, but in the long run it does not open up the cloud’s opportunities and brings no efficiency gains.

‘Re-Platform’ is a way to migrate in which a number of changes are made to the application so that it can use services provided by the cloud service provider, such as the Amazon Aurora database.

Benefits:

- It is possible to achieve long-term financial gain.

- It can be scaled as needed.

- You can use a service whose reliability is the service provider’s responsibility.

Possible shortcomings:

- Migration takes longer than, for example, with the ‘Lift and Shift’ method.

- The scope of the migration can grow rapidly because of the relatively large changes made to the code.

‘Re-Architect’ is the most labour- and cost-intensive way to migrate, but the most cost-effective in the long run. During the re-architecture, the application code is changed enough for the application to run smoothly in the cloud. This means that the application architecture will take advantage of the opportunities and benefits offered by the cloud.

Advantages:

- Long-term cost savings.

- It is possible to create a highly manageable and scalable application.

- An application based on the cloud and a microservices architecture makes it easy to add new functionality and to modify existing functionality.

Disadvantages:

- Development and migration take more time and therefore cost more.

Start with the goal!

Successful migration starts with setting and defining a clear goal to be achieved. Once the goals have been defined and the architecture has been thoroughly mapped, it is easy to choose a suitable option from those listed above: ‘Lift and Shift’, ‘Re-Platform’ or ‘Re-Architect’.
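As a rough illustration of how goals can point to a strategy, the Python sketch below turns the trade-offs listed above into a rule of thumb. The two yes/no questions and the function name are assumptions made for this example; a real decision would weigh many more factors, such as budget, timeline and the mapped architecture.

```python
from enum import Enum


class Strategy(Enum):
    LIFT_AND_SHIFT = "Lift and Shift"
    RE_PLATFORM = "Re-Platform"
    RE_ARCHITECT = "Re-Architect"


def suggest_strategy(needs_cloud_scalability: bool,
                     needs_fast_feature_development: bool) -> Strategy:
    """Rule of thumb assumed for this sketch, not a definitive method."""
    if needs_fast_feature_development:
        # New functionality is easiest to add to an application rebuilt for the cloud.
        return Strategy.RE_ARCHITECT
    if needs_cloud_scalability:
        # Managed services and scaling, without a full rewrite.
        return Strategy.RE_PLATFORM
    # Fastest and cheapest in labour, but makes no use of cloud capabilities.
    return Strategy.LIFT_AND_SHIFT


print(suggest_strategy(False, False).value)  # Lift and Shift
print(suggest_strategy(True, False).value)   # Re-Platform
print(suggest_strategy(True, True).value)    # Re-Architect
```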

Each strategy has its advantages and disadvantages. To establish a clear and objective plan, it is recommended to use the help of a reliable partner with previous experience and knowledge of migrating applications to the cloud.
